This story spans several years between 2010 and 2014. It was sitting in my drafts folder for over a decade, so some links may be dead and the current status of various people or companies or science may no longer be accurate.

Here’s the short version…

- My lab got a drug from a company via a material transfer agreement (MTA)

- We found some really cool results

- We tried to publish results

- The company tried to sue us

- The company went bankrupt

- We published the paper anyway

- Rival paper from former company employees came out, doesn’t cite us

Background on Conflict of Interest in Basic Science

For life scientists in academia, one of the most common examples of potential conflict-of-interest (COI) is receiving resources from a drug company to do a research project. Sometimes this takes the form of money directly intended to fund the research. Other times the reward can be a position on the scientific advisory board of the company, or being granted access to rare chemicals/reagents that are not commercially available to everyone. In the US, such support is usually required to be disclosed both to the employing University, and federal or other funding agencies.

Usually when the resource being shared is a new drug, companies like to control what happens to the material, such as whether/how the academics can discuss the results with others, including publication. Such legal matters are usually dealt with via Confidentiality/Non-Disclosure Agreements (CNDAs) and Material Transfer Agreements (MTAs). These documents are drafted up jointly between the lawyers in the company and the University’s Technology Transfer Office. Herein, I discuss an example of such an agreement gone awry….

The research that led us to request an MTA for a new drug

My lab has a long-standing interest in mitochondrial K+ channels, and in our work we’ve often used a drug called NS1619, which is reported to specifically activate mitochondrial KCa channels. The nomenclature is a bit odd here… a family of channels is encoded by the Slo genes, of which there are 4 isotypes in mammals (Slo1, Slo2.1, Slo2.2, Slo3). The channel proteins are also called “BK” channels, since they are large conductance (big) K+ channels. They’re also often referred to as KCa channels, even though the Slo2 variants are not Ca2+ activated (they’re Na+ activated, so KNa channels). There are also some hybrid terms such as BKCa, or mBK (mitochondrial BK). Anyway, the important thing is these channels are thought to be important for protecting the heart against ischemia-reperfusion (IR) injury, and the debate mainly centers on which isotype is present in mitochondria and responsible for these effects. Other people think it’s Slo1 but our results suggest otherwise.

NS1619 was originally made by a Danish company called Neurosearch (archived link from 2019 shortly before site went dead), but is readily available from chemical suppliers such as Sigma. However, over the decade or so since the drug was made available, it emerged that NS1619 might have non-specific side effects in mitochondria. So, interest in the field shifted toward a related newer compound, NS11021, reported to be more potent and specific for the mito’ channel. NS11021 is not commercially available, so in late 2011 we wrote to Neurosearch to obtain some. Following a few rounds of back-and-forth between lawyers, an MTA was executed and shortly afterward we were sent an aliquot of NS11021. The scientist within the company who liaised with us, Morten Grunnet, was collaborative and very open to discussing experimental details and results. I consider him a colleague and friend to this day, and he was a co-author on our paper that came out of this work.

Although publishing the entire MTA here would be inappropriate, below I want to highlight specifically the part describing the work to be performed in my lab using the drug. Note the mention of potential use of knockout mice for various types of K+ channel…

Appendix A: Study Protocol. The overall direction of the research project, which the study will be part of, is the identification of K+ channels in mitochondria, which are involved in cardioprotection by ischemic or anesthetic preconditioning. The aim of the study would be to investigate the role of the BK channel in anesthetic preconditioning using FVB mice. In Langendorff perfused rat hearts, the volatile anesthetic isoflurane provides protection against ischemia-reperfusion injury in a manner that is sensitive to the BK channel inhibitor paxilline. University of Rochester would like to determine if this manner of isoflurane mediated protection can be mimicked by the administration of the specific BK channel activator NS11021. University of Rochester would also like to look at the effect of NS11021 on BK channel activity in isolated mitochondria, using novel thallium-flux assay we recently published (PMID:20185796, PDF attached). None of the K+ channels in the mitochondrial membrane have been identified at the molecular genetic level. Knockout mice for various types of KCa and KATP channels may be available to us in future, and knowing which channel to look for could be greatly facilitated by the use of highly specific pharmacologic reagents.

So, we did some experiments and the data looked great. Without getting into details (all of which can be found in our published paper on this topic), the key finding was that NS11021 protected hearts against IR injury, and this effect was lost in Slo1-/- mice. Furthermore, the effect was likely mediated not by Slo1 channels inside mitochondria of cardiomyocytes, but instead by channels in intrinsic cardiac neurons.

Things get nasty

You might think that if a company was touting a drug as acting on a specific target, and someone came along with data showing the effect of the drug disappears in a knockout mouse for the target, they’d be happy. Not Neurosearch. They lawyered up and tried to stop us from publishing. They essentially said that if we attempted to publish, it would be a breach of the MTA, and they would sue for breach of contract.

Backing up for a second, during the time we were collecting our data and preparing our manuscript, we were in regular discussion with Dr. Grunnet, who was essentially our contact within the company. However, in the summer of 2012 he left Neurosearch to work for a different Danish Biotech, Lundbeck. We continued communicating while the manuscript went through several iterations, then in June 2012 we heard via Dr. Grunnet that Neurosearch were “rather reluctant to let us publish since they claim that the part including KO mice is not covered by the MTA”. Also “they have decided to perform a small study on 11021 in WT and BK KO mice with I/R in Langendorff. The primary aim is to confirm BK selectivity of 11021 and they are aiming at a small publication”. The suggested solution was that we hold off until their study was published. In other words, the company scientists didn’t want to get scooped!

Given our study was complete and ready to publish, and theirs had barely even begun, we tried explaining that it might be better to simply add the interested parties onto our paper as co-authors. In my view this was an outreach effort that went above and beyond necessary collegiality (remember – they were trying to sue us). However, we were then contacted by Søren-Peter Olesen who oversaw Neurosearch’s collaboration with the University of Copenhagen, and also holds an academic appointment there.

In the remainder of fall 2012, while our paper was being put through the wringer by journal reviewers, a conversation ensued between myself, Dr. Olesen, and the Tech’ Transfer lawyers here in Rochester. This culminated in an email from Dr. Olesen in November, stating “Neurosearch will then close the matter and conclude that you do not want any legal permission to publish on NS11021 in relation to transgenic animals”.

We had the University lawyers look over the MTA again, and they reached two important conclusions… First, the language was sufficiently broad regarding knockout mice that in their view we hadn’t breached the terms. Second (and more important), the company had no right to bar us from publishing because the MTA itself made no mention of a right-of-veto on publications. The MTA simply requested we send a copy of any manuscript to Neurosearch 30 days before journal submission, for them to review and comment. We did this, and in fact our company point-person had been kept in the loop from the earliest stages, so Neurosearch knew about these knockout mouse studies for almost a year before the paper was submitted.

Neurosearch goes kaput

Eventually, after 4 journals and 8 months of review/reject/resubmit, we got our paper published in PeerJ in February 2013. But then something strange happened… Neurosearch went belly up. Well, technically the terms used were restructuring, transfer of assets, prosecution for share price manipulation. So, the company that threatened to block us from publishing no longer exists. Another company trading under the same name and with new management did emerge from the ashes of the old Neurosearch, but disappeared in 2019.

What about the company-backed study?

Olesen and colleagues finally published their version of the story in PLoS One, in collaboration with a group from the University of Tübingen. Aside from the fact that the new paper doesn’t cite our work, there are a number of problems with it…

- The paper purports to show patch-clamp data of BK channel activity in mitoplasts (isolated mitochondrial inner membranes). The problem is, they used a “Port-a-Patch” system from NanION. This system works by using a vacuum to pull down a spherical object (ideally a mitoplast) onto a pre-formed patch pipet, with no microscope or any other confirmation that what you’re actually patching IS a mitoplast. The method depends on the purity of the “soup” you put into the chamber. We tested one of these systems in my lab in 2012 and determined it was useless for mitoplasts. Any contamination with other membrane fragments gave false readings, and notably the PLoS paper contains no information on the purity of preparations used for patching. The image of mitoplasts in Figure S1 shows a lot of membrane fragments apart from mitoplasts.

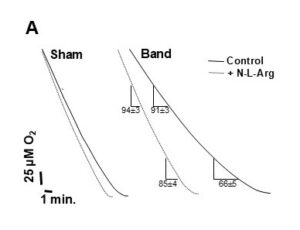

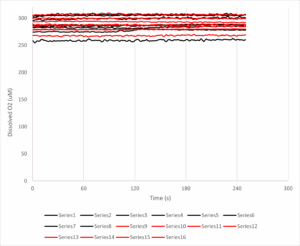

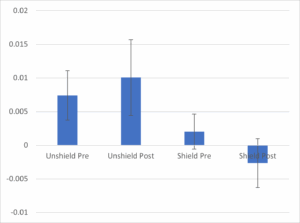

- The IR injury data lead them to conclude that hearts from Slo1 KO mice cannot be protected by ischemic preconditioning (IPC). This result is completely opposite to our published findings. No attempt is made to explain this (remember, they don’t cite us) but here are some suggestions… we did everything in male mice, on a single genetic background (FVB), whereas they used both male and female mice in a mixed background (Sv129/C57BL/6). We measured cardiac function with a pressure balloon, but they only measured heart rate, so did not have any functional data for the heart perfusions. They had a 4 minute delay on ice between heart extraction and perfusion. The mouse heart is exquisitely temperature sensitive, and our delay is typically <30 s, with no ice. Any longer and contractility is compromised. In effect, they may have been looking at hearts with drastically compromised function, before any IR injury.

- We use a constant flow system so the heart is always sufficiently oxygenated and function is not affected by coronary vascular tone. The Lukowski et al. study used constant pressure, in which coronary flow (and so O2 delivery) is affected by vascular tone. They showed that IPC improved post-IR coronary flow, and this was absent in BK knockouts. It cannot be ruled out that the knockout mice simply had compromised post-IR coronary flow. This links the effects of BK in IPC to coronary vasculature, NOT mitochondrial channels inside cardiomyocytes.

- The dose of NS11021 used in the patch-clamp studies is very high (10 μM). Previously we showed 50 nM could activate a paxilline sensitive K+ channel in mitochondria. The PLoS paper claims that their patch data complement findings of another paper touting Slo1 as a mito’ BK channel, but that paper didn’t show any patch data.

Overall not a good situation. Sufficient time has passed since these events that it’s probably OK to talk about it now. Olesen is retired/emeritus. The company that NS sold some of its IP/assets to is still around, but has yet to bring a single product to market based on the Neurosearch drugs. The lawyers involved have all moved on in their careers. My lab no longer does much work on mitochondrial ion channels (but we do have some unpublished data). People are still publishing on NS11021, and I doubt that any of the more recent folks using this molecule are aware of the above troubles.